SLMs vs LLMs: A Student’s Guide to Learning Small and Large Language Models in AI

Introduction

Artificial Intelligence (AI) and Machine Learning (ML) are developing rapidly, and one of the most exciting developments in the field is language models: the technology behind the AI chatbots, virtual assistants, and tools we use every day.

In the AI courses I oversee, students regularly ask: should I learn Small Language Models (SLMs) or Large Language Models (LLMs)? It is an especially relevant question in practice, because each has its own characteristic strengths and weaknesses. As someone who has worked in AI for a couple of years, I can say with confidence that understanding the difference between the two is very important for developing skills that are relevant in the real world.

When I train students in the AI & Machine Learning Course, I always make a point of explaining how SLMs and LLMs serve different purposes, and why learning about both opens up better opportunities in education, research, and careers.

What Are Language Models?

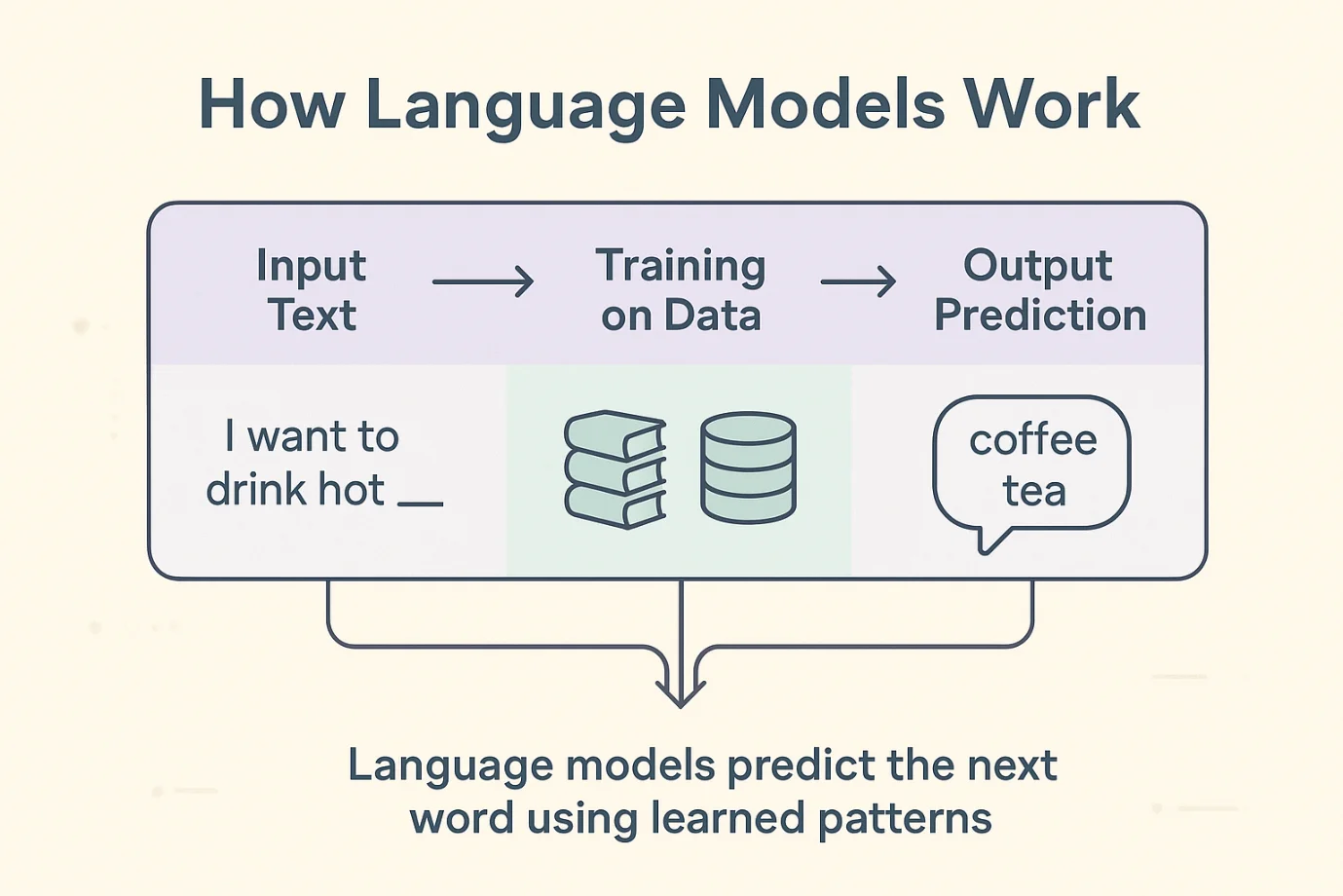

A language model is a type of AI: a computer program designed to understand, process, and generate human language.

Essentially, building one comes down to training a computer to predict the next word in a sequence based on what came before it.

If you write “I want to drink hot ___”, the model might suggest “coffee” or “tea”.
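To make next-word prediction concrete, here is a minimal sketch of a toy "bigram" model. It is a deliberate simplification and not how modern neural language models work internally: it only counts which word follows which in a tiny made-up corpus. The corpus and the function names (`train_bigrams`, `predict_next`) are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for every word, which words follow it in the training text."""
    follow_counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(follow_counts, word, top_k=2):
    """Return up to top_k of the most frequent followers of `word`."""
    counts = follow_counts.get(word.lower())
    if not counts:
        return []  # the word never appeared with a follower in training
    return [candidate for candidate, _ in counts.most_common(top_k)]


# Tiny made-up corpus, for illustration only.
corpus = [
    "I want to drink hot coffee",
    "I want to drink hot tea",
    "she likes hot coffee",
    "we drink cold water",
]

model = train_bigrams(corpus)
suggestions = predict_next(model, "hot")
print(suggestions)
```

Real language models replace these simple counts with billions of learned parameters, but the goal is the same: given what came before, rank the likely next words.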

Training a Language Model

Language models are trained on large collections of text: books, website content, articles, conversations, and so on. They learn grammar, word meanings, writing conventions, facts, and even patterns of reasonable responses. Eventually, the model becomes good at answering questions, writing essays, writing code, and more.

Impact on AI & ML Applications

Language models are used in countless applications:

- Chatbots for customer service.

- Translation tools such as Google Translate.

- Virtual assistants like Siri and Alexa.

- AI tools that can write code, blogs, or reports.

From my experience: In the early 2010s, chatbots could handle only prompted, scripted answers. Compare that with today’s language models, which can hold a natural, flowing conversation. This evolution in capability is what makes them so powerful.

Large Language Models (LLMs)

Large Language Models (LLMs) are cutting-edge AI systems that contain billions to hundreds of billions of parameters. Parameters are like internal “settings” in the model, which allow it to make sense of language. Having so many parameters makes an LLM a powerful tool for research, but it also makes it resource-heavy.
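A rough back-of-the-envelope calculation shows why parameter count translates into hardware requirements. The sketch below estimates only the memory needed to hold the weights. It assumes 2 bytes per parameter for 16-bit weights and half a byte for 4-bit quantized weights, treats 1 GB as 10^9 bytes, and ignores everything else a running model needs. The model sizes are round illustrative numbers, not official specifications.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# Illustrative sizes only; these are round numbers, not official specs.
examples = {
    "small model (3 billion parameters)": 3_000_000_000,
    "mid-size model (7 billion parameters)": 7_000_000_000,
    "large model (70 billion parameters)": 70_000_000_000,
}

for name, params in examples.items():
    fp16 = weight_memory_gb(params, 2)    # 16-bit weights: 2 bytes each
    int4 = weight_memory_gb(params, 0.5)  # 4-bit quantized: half a byte each
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

By this estimate, a 7-billion-parameter model needs about 14 GB for its 16-bit weights, while a 70-billion-parameter model needs about 140 GB, which is well beyond a typical laptop.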

Examples

- GPT (OpenAI)

- LLaMA (Meta)

- Claude (Anthropic)

- PaLM (Google)

Strengths of LLMs

- Advanced reasoning: LLMs can solve complex math problems, code a small project, and even explain scientific theories.

- Creativity: They can create articles, essays, marketing content, and even poetry.

- Multi-domain expertise: Because of the enormous quantity of data they are trained on, they can explain history, medicine, finance, and beyond.

Limitations of LLMs

- High cost: Training and serving LLMs can cost many millions of dollars.

- Infrastructure: LLMs need substantial hardware, such as GPUs, TPUs, or cloud clusters.

- Slower inference: Because of their size, they respond more slowly than smaller models.

Small Language Models (SLMs)

Small Language Models are AI systems that contain fewer parameters, usually in the millions to a few billion. Parameters are the internal values that allow a model to learn language patterns. Because SLMs have far fewer parameters than LLMs, they are lighter, faster, and more resource-efficient.

SLMs are generally not trained on massive, broad datasets; instead, they may be trained on smaller or domain-specific datasets. This lets them perform very well on focused tasks without relying on massive computational power.

Examples

- Phi-3 (Microsoft)

- Mistral 7B

- Gemma (Google)

Key Strengths

- Accessibility: They can run on typical laptops or desktops.

- Speed: They respond faster than LLMs because they are smaller.

- Cost: Running and fine-tuning them does not require expensive GPUs or cloud services.

- Fine-tuning: Even with small datasets, SLMs can be fine-tuned for specific tasks such as chatbots, customer support tools, or subject-specific tutors.

Limitations

- Knowledge coverage: Because they are trained on smaller datasets, they “know” less than LLMs.

- Problem-solving ability: They tend to struggle more with advanced problem solving, critical thinking, and multi-step reasoning tasks.

- Context: They handle everyday conversations and shorter text inputs well, whereas LLMs can handle long and complex conversations.

Analogy: If an LLM is a large library with a full set of encyclopedias about everything, an SLM is like a focused study guide. It holds far less information, but what it holds is relevant; it has a much smaller physical footprint; it is more practical for everyday use; and you can take it with you.

From my experience: I helped a small retail business develop a chatbot using Mistral 7B that answered more than 50 common customer questions. It ran on their office computer without any issues, and they never needed expensive servers.

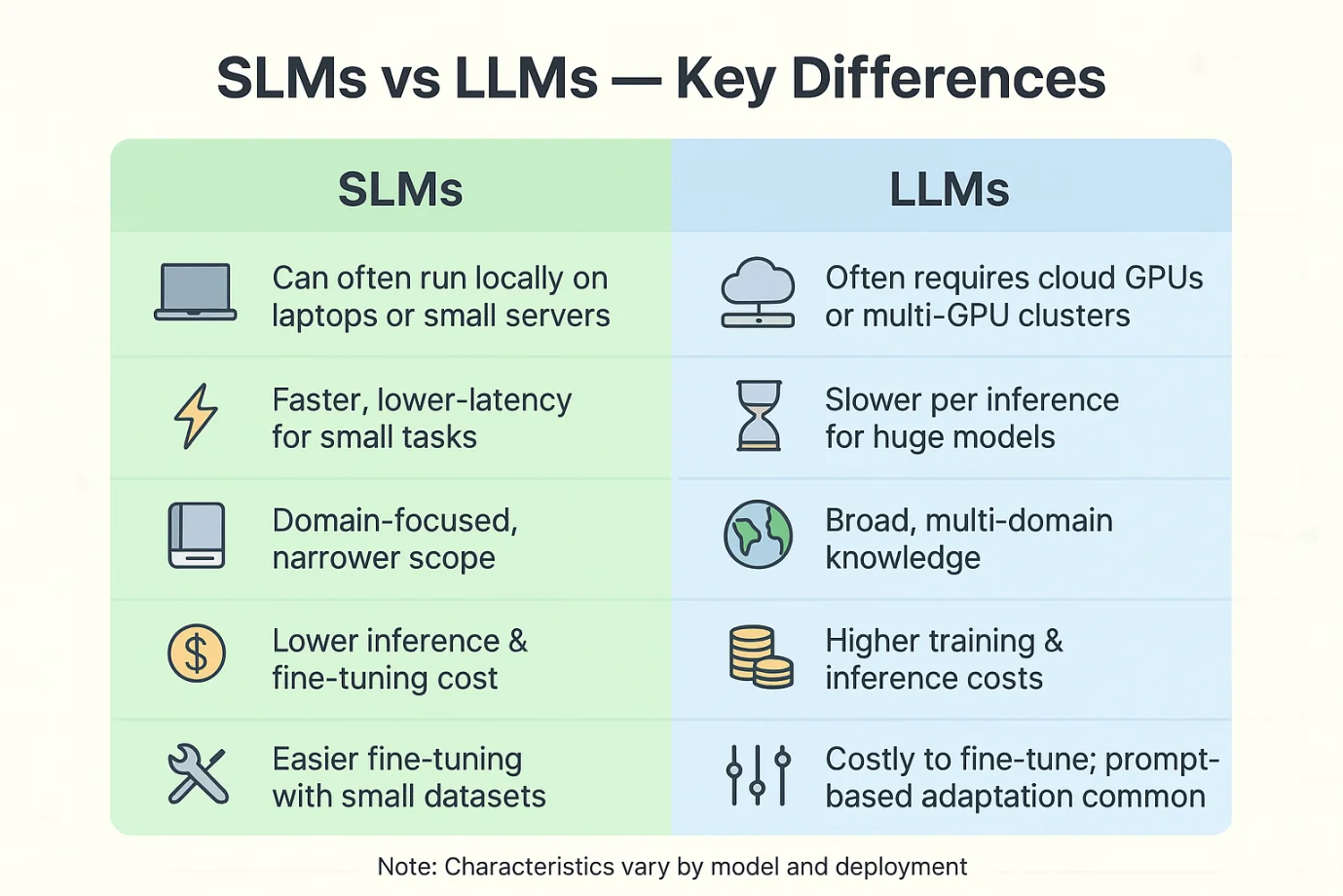

SLMs vs LLMs: Important Differences

| Feature | Small Language Models (SLMs) | Large Language Models (LLMs) |
| --- | --- | --- |
| Size (parameters) | Millions to a few billion | Billions to hundreds of billions |
| Cost to run | Very low (can run on laptops) | Very high (needs GPUs or cloud) |
| Training data | Smaller, domain-focused | Massive, multi-domain datasets |
| Speed & efficiency | Fast, lightweight | Slower, heavy computation load |
| Knowledge coverage | Narrow, specific topics | Broad, multi-domain knowledge |
| Accuracy | Good for simple/medium tasks | High accuracy in complex reasoning |
| Memory needs | 8–16 GB RAM (runs locally) | 64–128 GB RAM or cloud clusters |
| Use cases | Chatbots, education, small business apps | Enterprise AI, research, advanced assistants |
| Customization | Easy, needs little data | Costly, needs large datasets |
| Energy usage | Low (eco-friendly) | Very high (big footprint) |
| Accessibility | Beginner/student friendly | Often limited by API access |
| Deployment | Local or small servers | Requires cloud infrastructure |
| Examples | Phi-3, Mistral 7B, Gemma | GPT-4, LLaMA, Claude, PaLM |

Importance of SLMs for Students

Many students assume that they need a supercomputer before they can work with AI and LLMs. This is not true, and it is exactly where SLMs are useful.

- Learning is possible: SLMs have small footprints and can run on a standard laptop or on free platforms such as Google Colab. This means the average student can experiment without worrying about large expenses such as GPUs or cloud subscriptions.

- Hands-on learning experiences: Students can download free, open-source SLMs from Hugging Face and start building projects today. They can use SLMs to create chatbots, text summarizers, or even a personal voice assistant without worrying about how much it costs or how long it takes to get started.

- Cost-effective real-world projects: Imagine you are a student building a final-year project. Training an LLM could easily cost you thousands of rupees/dollars. Instead, you could fine-tune an SLM for the university library system, or work with local businesses to automate answers to their “frequently asked questions”.

Based on my teaching experiences

When working with new learners, I always recommend that they begin their exploration with SLMs, because it helps to minimize their fear of “not enough computing power”. I always notice the excitement and joy in students when they see their own AI model answering their questions, and it builds their confidence.

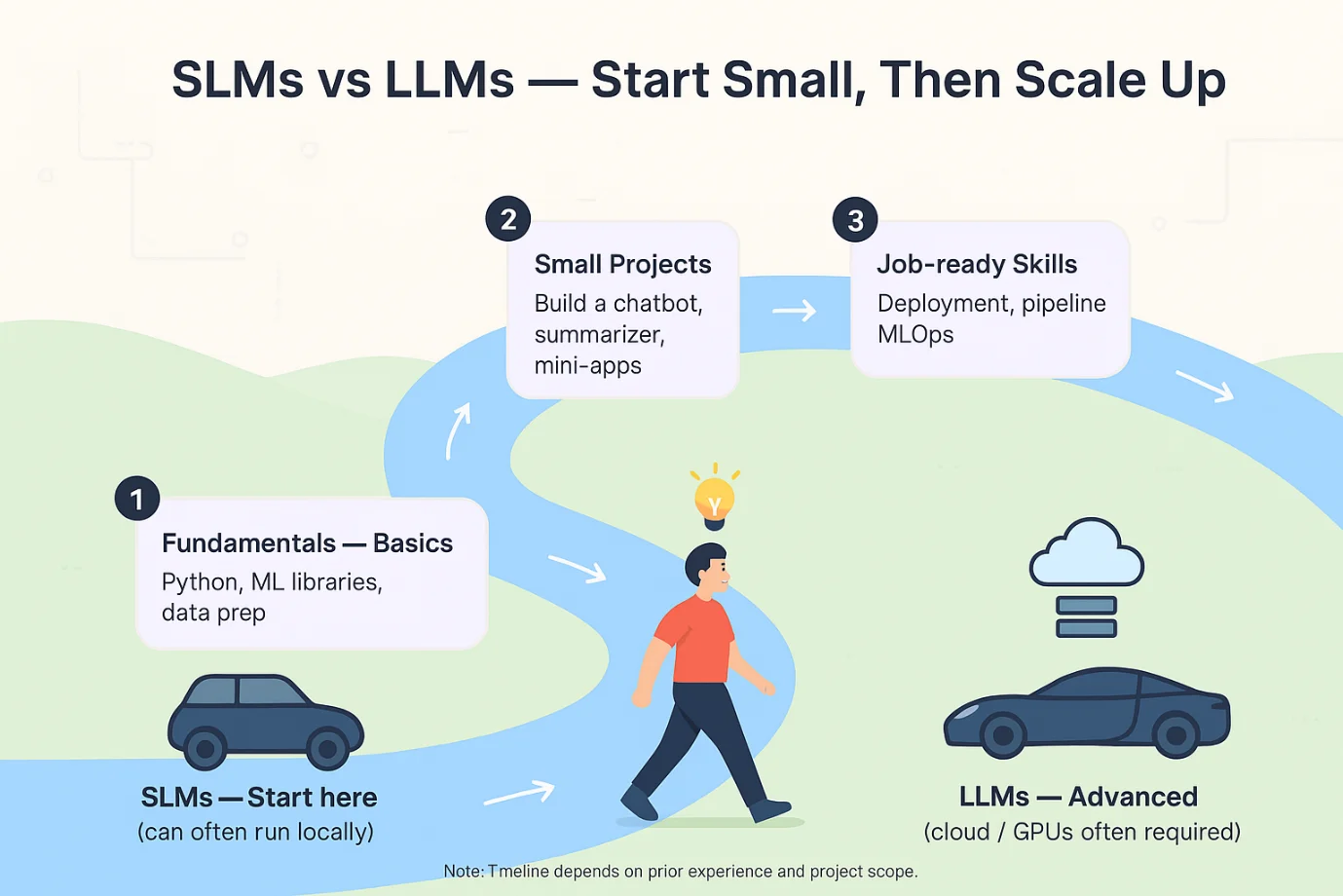

When to Learn SLMs vs LLMs

Students frequently ask me, “Should I just learn larger models like GPT and skip SLMs, since we all know they’re better?” My answer is always the same: start small, then go big.

- When You Are a Beginner, Start with SLMs: There is no better starting point than an SLM. You will learn machine learning fundamentals, fine-tuning, and deployment without the burden of extreme costs or complicated infrastructure.

- When You Want to Meet Job Demand, Learn Both: Plenty of companies use SLMs for lightweight, cost-effective applications (like customer support chatbots or e-commerce assistants). However, those same companies, along with large tech firms and research facilities, are relying more on LLMs for advanced AI solutions. Employers want candidates who have been exposed to both types of models.

- How They Are Interrelated: Think of an SLM as your everyday car and an LLM as a sports car. You do not need a Ferrari to learn how to drive; you learn in a basic car, gain driving experience, and eventually drive sports cars. Understanding SLMs will give you the skills to handle LLMs if you intend to use them in the future.

Real-world example: I mentored a student who built a customer support chatbot that used an SLM (Mistral 7B) for a local store. Later, they used GPT-4 during an internship to automate summarization of medical reports. Because they had used smaller models before, they were able to quickly adapt to using LLMs.

Practical Training Tips

If you are ready to get your hands on actual language models, here’s how to get going:

Tools & Frameworks

- Hugging Face – The best place to find many pre-trained small models.

- PyTorch or TensorFlow – Two widely used libraries for training and experimentation.

- Google Colab – A free, cloud-hosted notebook environment that supports collaboration and offers GPU-enabled resources.

Start Projects with SLMs

- Make a chatbot for your college website.

- Develop a tool to summarize your class lecture notes.

- Build a simple translation app to translate small phrases.

Transitioning to LLMs

- Once you feel excited and confident about your projects, experiment with APIs such as OpenAI’s GPT, and try fine-tuning bigger open-source models.

- Experiment with hybrid projects: use an SLM for speed and combine it with an LLM for complex or sophisticated tasks.
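The hybrid idea can be sketched as a simple router that sends easy prompts to a small model and harder ones to a large model. Everything here is hypothetical: the `looks_complex` heuristic, its keyword list, and the two stand-in model functions are assumptions for illustration, and a real project would replace the stand-ins with calls to a local SLM and a hosted LLM API.

```python
def looks_complex(prompt, max_words=30):
    """Crude, hypothetical heuristic: long prompts or reasoning keywords count as complex."""
    keywords = ("prove", "step by step", "analyze", "compare")
    text = prompt.lower()
    return len(text.split()) > max_words or any(k in text for k in keywords)


def route(prompt, small_model, large_model):
    """Send simple prompts to the small model and complex ones to the large model."""
    if looks_complex(prompt):
        return "large", large_model(prompt)
    return "small", small_model(prompt)


# Stand-ins for real models (assumptions, not real APIs).
def fake_small_model(prompt):
    return f"[small model answer to: {prompt}]"


def fake_large_model(prompt):
    return f"[large model answer to: {prompt}]"


questions = [
    "What are your opening hours?",
    "Compare these two refund policies step by step.",
]

for question in questions:
    chosen, answer = route(question, fake_small_model, fake_large_model)
    print(chosen, "->", answer)
```

The design choice is to keep the routing rule separate from the models, so the heuristic can later be tuned or swapped out without touching the code that calls either model.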

Pro tip from experience: It is better to start with something small but useful, such as a text summarization app for your own lecture notes. You will learn more quickly when the project solves a problem you actually face in your daily life.

The Future of Language Models in Education

- Rise of specialized small models: SLMs are increasingly being developed for areas such as healthcare, finance, and education.

- Universities are adapting: Many training programs are using both SLMs and LLMs to provide balanced exposure.

- Why balance is important: Students who understand both will have an advantage when they enter the job market.

Conclusion

SLMs and LLMs are not enemies. They are two sides of the same coin. For students, SLMs are the best place to start: they are inexpensive, quick, and useful. You can always move on to the depth and scale of LLMs as you progress.

Let me conclude with my advice after many years in the domain, and it is simple: start small, learn by doing, then scale to larger models. That is the best way to build fundamental knowledge of AI & Machine Learning.